Hi,

I'm Michele Tufano

Ph.D. Candidate in Computer Vision and AI

Contact

About

I'm Michele

Ph.D. Candidate at Human Nutrition and Health, Wageningen University and Research | Research Affiliate at Senseable City Lab, Massachusetts Institute of Technology

I have experience in computer vision and deep learning, with a focus on video classification to predict human eating behavior and count eating and drinking events.

I train and optimize deep learning models, and I design and implement algorithms for video analysis and for object and event recognition. I work in Python with OpenCV, PyTorch, TensorFlow, Keras, and YOLO, and I use MediaPipe keypoints to track movements.

In my free time, I enjoy playing basketball, hiking, and cooking Italian food.

Skills

AI and Computer Vision Engineer

I've always liked learning new things, especially about science and technology. I wasn’t a coding genius growing up. My interest in coding started unexpectedly in a Chemistry class during my Bachelor's degree, when the professor showed us how to use the terminal to run a program that visualized molecules. I was fascinated, so I installed Linux on my computer and started exploring programming on my own. That experience changed my path. I moved from working in labs to studying bioinformatics, and eventually into data science and AI. What started as curiosity has now become a real passion.

Projects

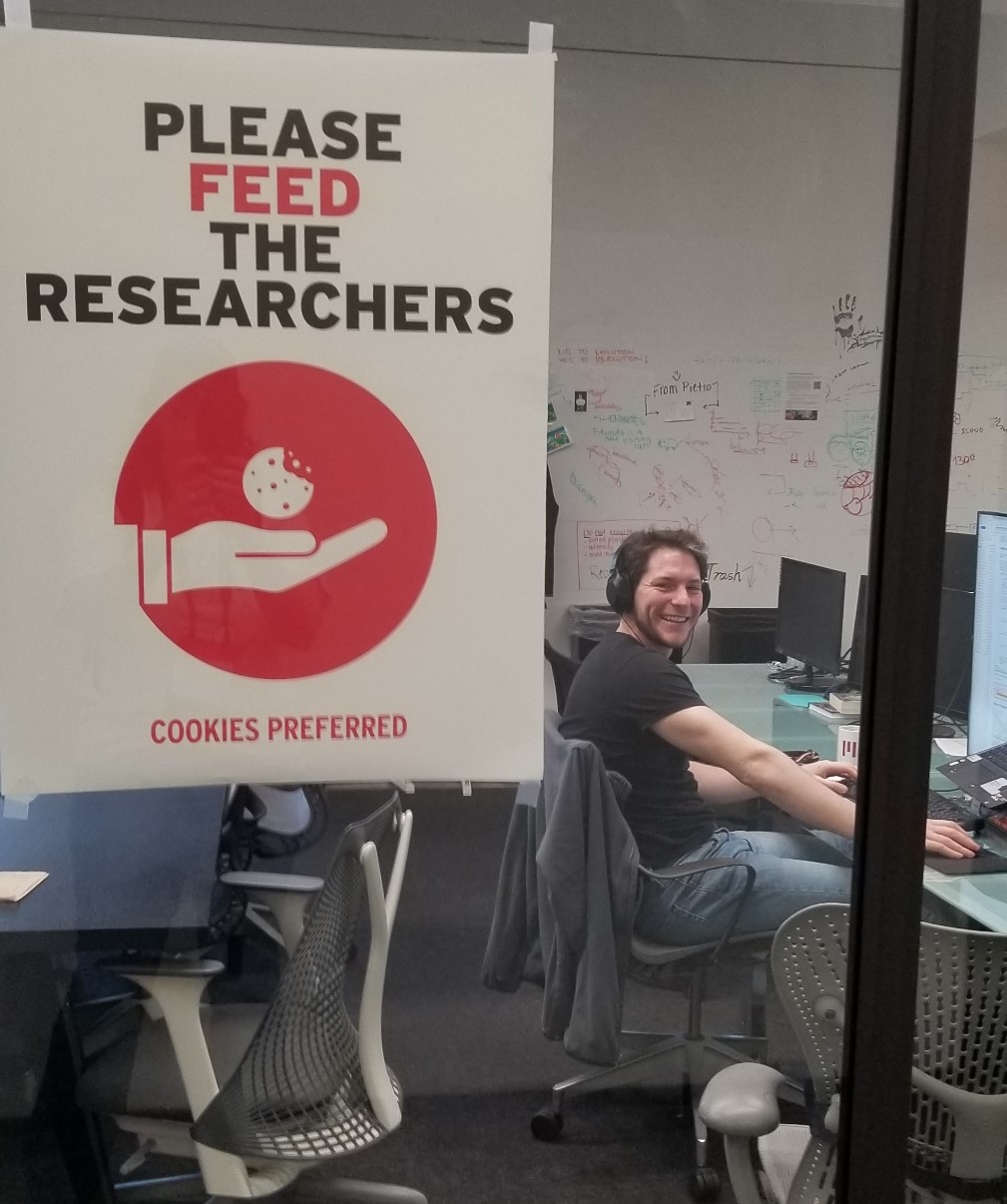

The Eatpol Toolkit Software

How can AI automate the analysis of eating behavior?

I am developing software that tracks how fast people eat, counts bites, chews, and sips, and even measures which researcher eats a cookie the fastest! All without lifting a finger: the analysis is fully automated thanks to deployed computer vision models and software engineering.

Eatpol

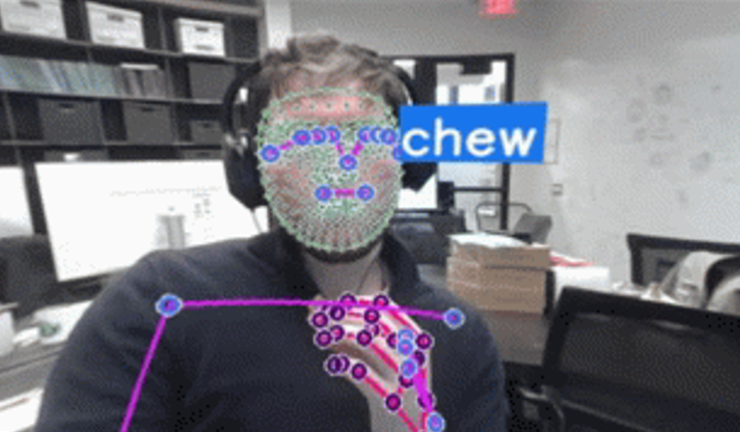

Can we count bites, chews, and sips from video?

For this project, I created a dataset from videos of people eating a meal, and I trained video vision transformers in PyTorch to predict bites, chews, and sips.

The faster you eat, the more you eat!

This video classification model makes it possible to calculate how fast people eat, and eating behavior scientists will use it to develop strategies for reducing eating rate.
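As a rough illustration of the last step, here is a minimal sketch of how an eating rate could be derived once a model has predicted the time of each bite. The function name `eating_rate_per_minute` and the example timestamps are hypothetical and are not taken from the Eatpol code.

```python
def eating_rate_per_minute(event_times_s, meal_duration_s):
    """Return the number of detected events (e.g. bites) per minute.

    event_times_s: timestamps in seconds of each predicted event (hypothetical input).
    meal_duration_s: total meal duration in seconds.
    """
    if meal_duration_s <= 0:
        raise ValueError("meal_duration_s must be positive")
    return len(event_times_s) / (meal_duration_s / 60.0)


# Hypothetical example: six bites detected during a two-minute clip.
bite_times = [5, 20, 42, 70, 95, 110]
rate = eating_rate_per_minute(bite_times, meal_duration_s=120)
```

The same calculation applies to chews or sips by passing in the timestamps of those events instead.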

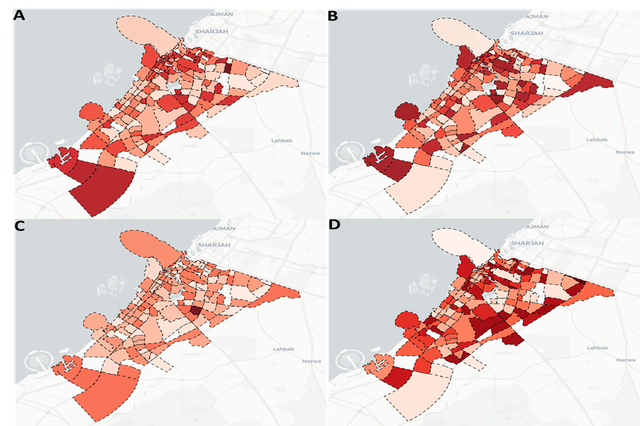

Foodpedia in Collaboration with MIT Senseable City Lab

How does the urban food environment impact health outcomes? Does the availability of certain foods in our neighborhoods affect our health? This research paper is currently under review at Nature Food.

For this project, I used data mining, SQL databases, and NLP techniques (BERT, LLMs, and RAG) to analyze and quantify the nutritional content of foods across neighborhoods in Boston, London, and Dubai.

Bitecounter

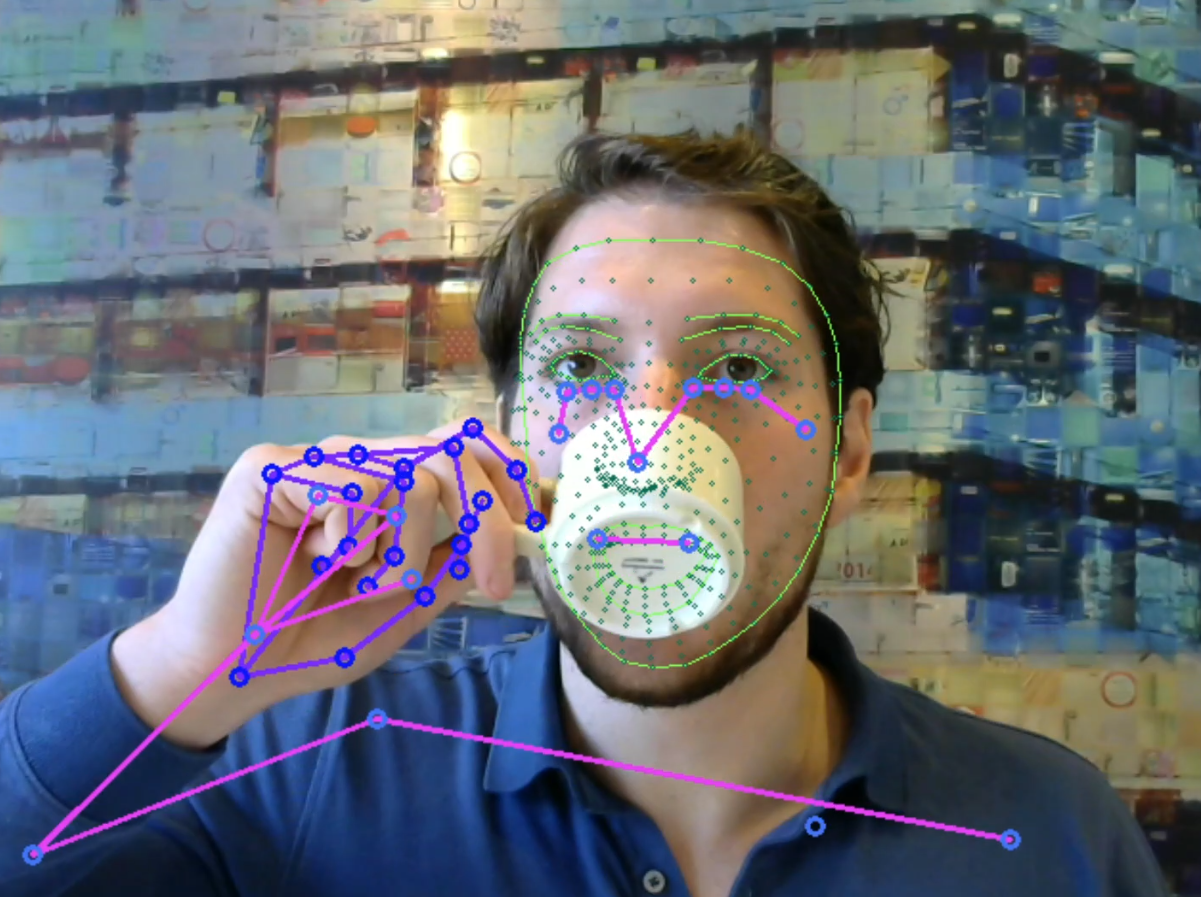

Can we use facial landmarks to count bites automatically?

I created a rule-based program that uses distances between facial landmarks to count bites from video files or in real time.
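To give a feel for the idea, here is a minimal sketch of a rule-based counter, assuming the landmarks for each frame have already been extracted as (x, y) points. It is not the published Bitecounter implementation: the choice of landmarks, the function names, and both thresholds are illustrative assumptions.

```python
import math


def normalized_mouth_opening(upper_lip, lower_lip, forehead, chin):
    """Lip-to-lip distance divided by face height.

    Dividing by face height makes the value independent of how close
    the person sits to the camera. All four points are (x, y) tuples.
    """
    face_height = math.dist(forehead, chin)
    if face_height == 0:
        return 0.0
    return math.dist(upper_lip, lower_lip) / face_height


def count_bites(openings, open_threshold=0.15, close_threshold=0.05):
    """Count open-then-close cycles in a per-frame series of mouth openings.

    Two thresholds (hysteresis) keep small jitter around a single
    threshold from being counted as several bites. The default values
    are placeholders, not tuned parameters.
    """
    bites = 0
    mouth_open = False
    for value in openings:
        if not mouth_open and value >= open_threshold:
            mouth_open = True
        elif mouth_open and value <= close_threshold:
            mouth_open = False
            bites += 1
    return bites


# Hypothetical per-frame openings containing two open-close cycles.
example = [0.02, 0.20, 0.18, 0.03, 0.10, 0.04, 0.25, 0.01]
n_bites = count_bites(example)
```

A rule this simple would also fire when someone talks or yawns, so a practical version would likely need additional rules to tell those events apart from bites.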

Check out the publication and the demo!

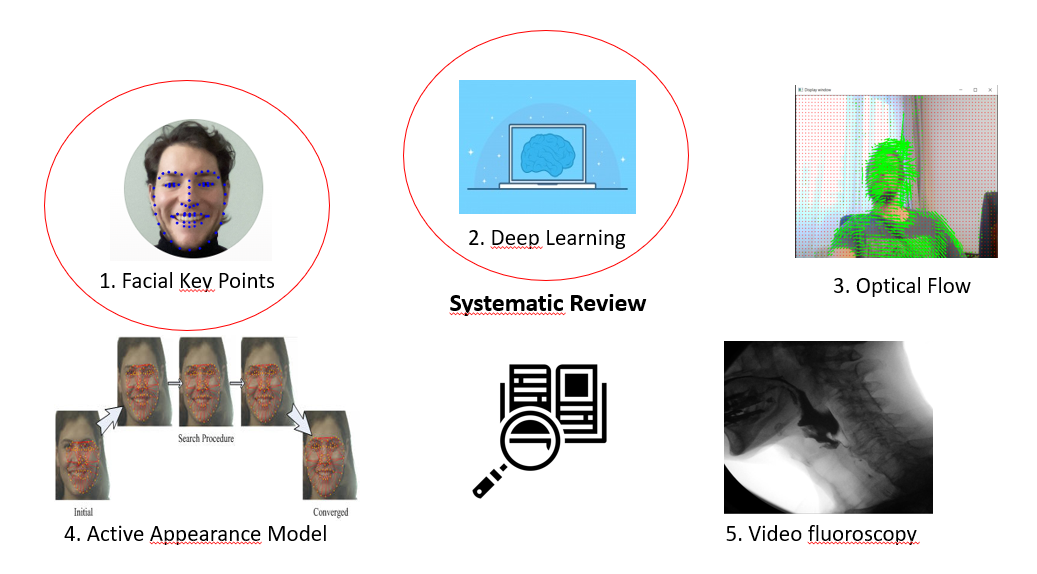

Systematic Review

What are the most promising methods for automating eating behavior analysis?

I reviewed the scientific literature and found that facial landmarks and deep learning are the most promising approaches.

Check out the publication